Jingyi Chen

About Me

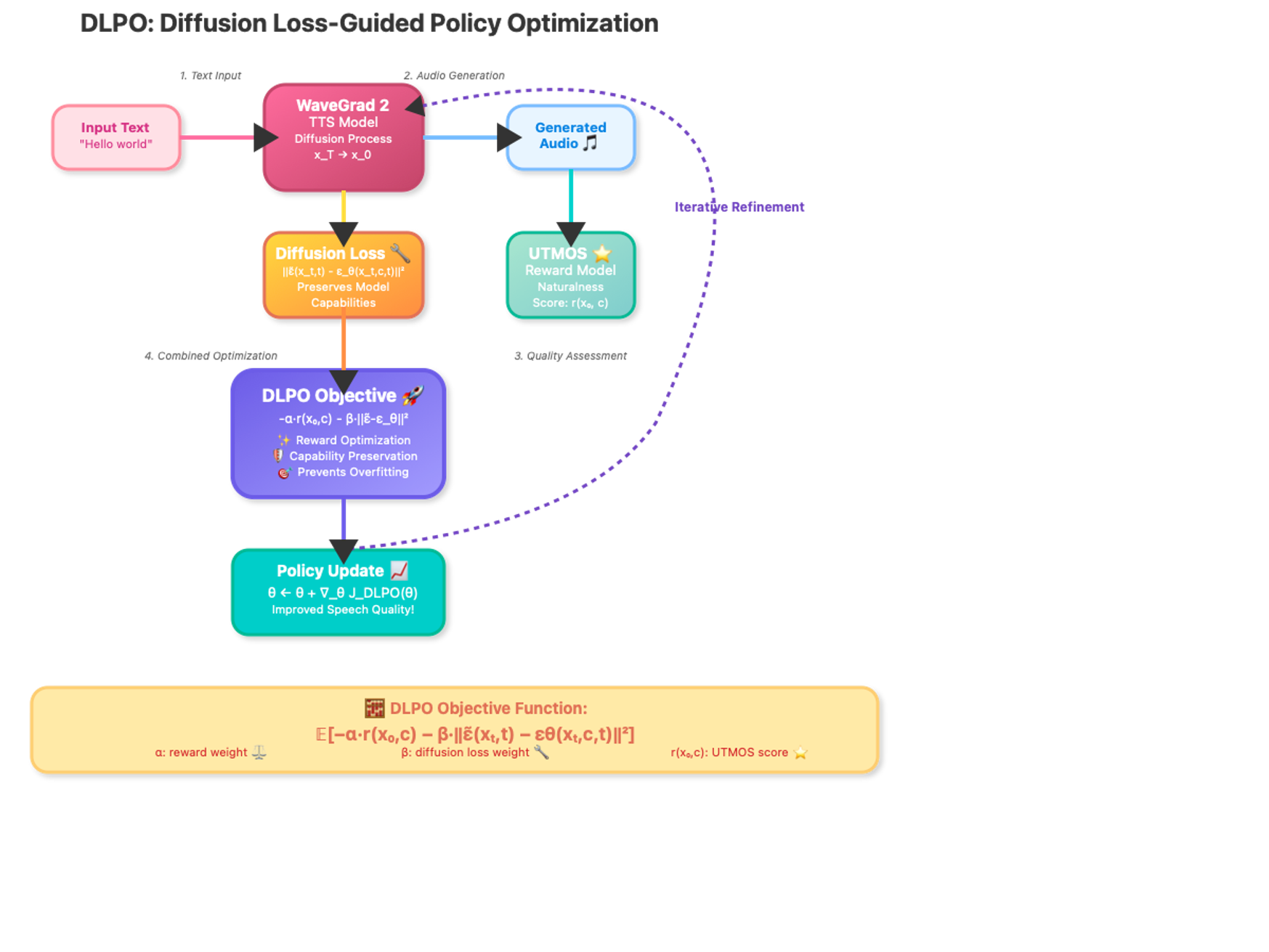

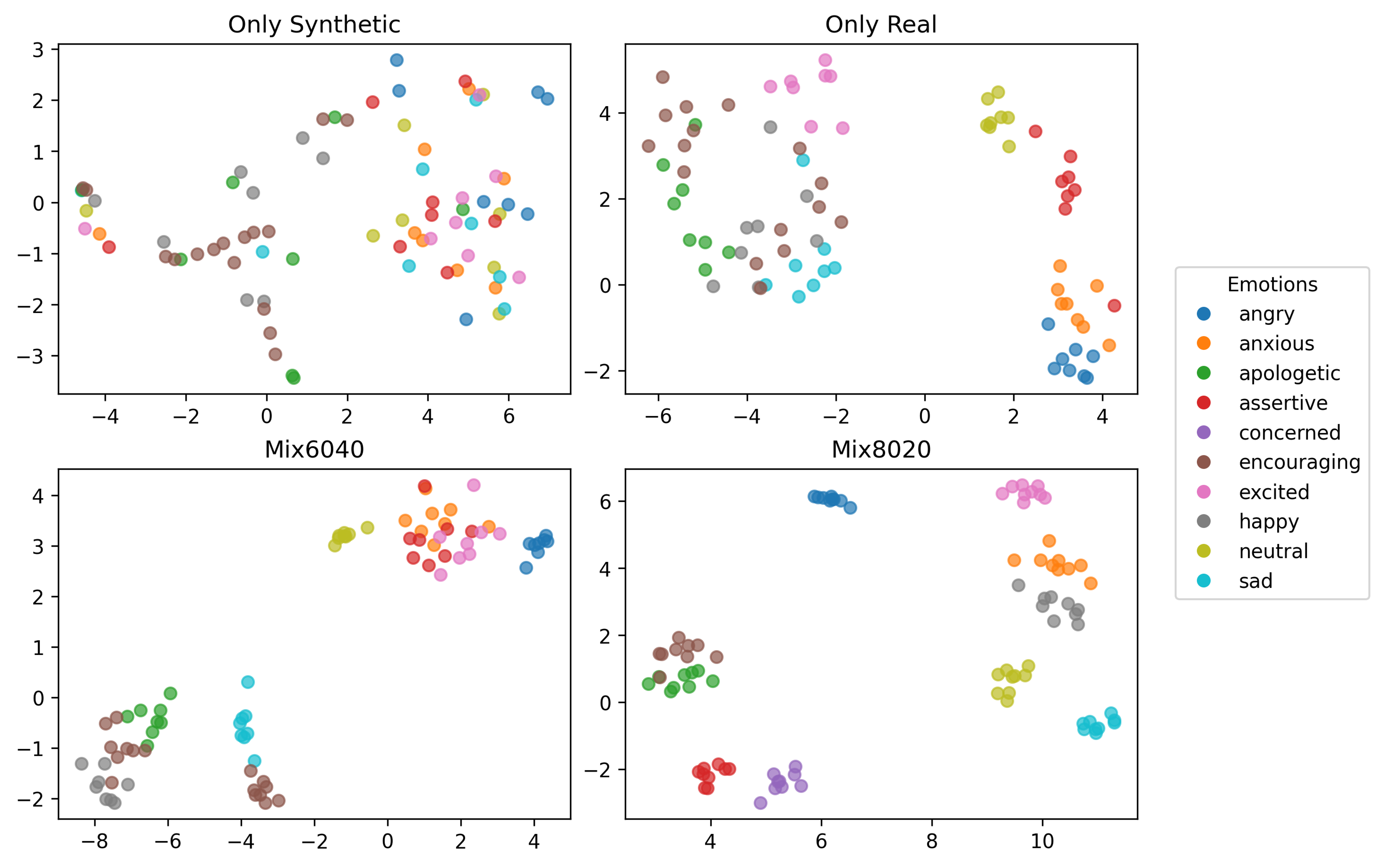

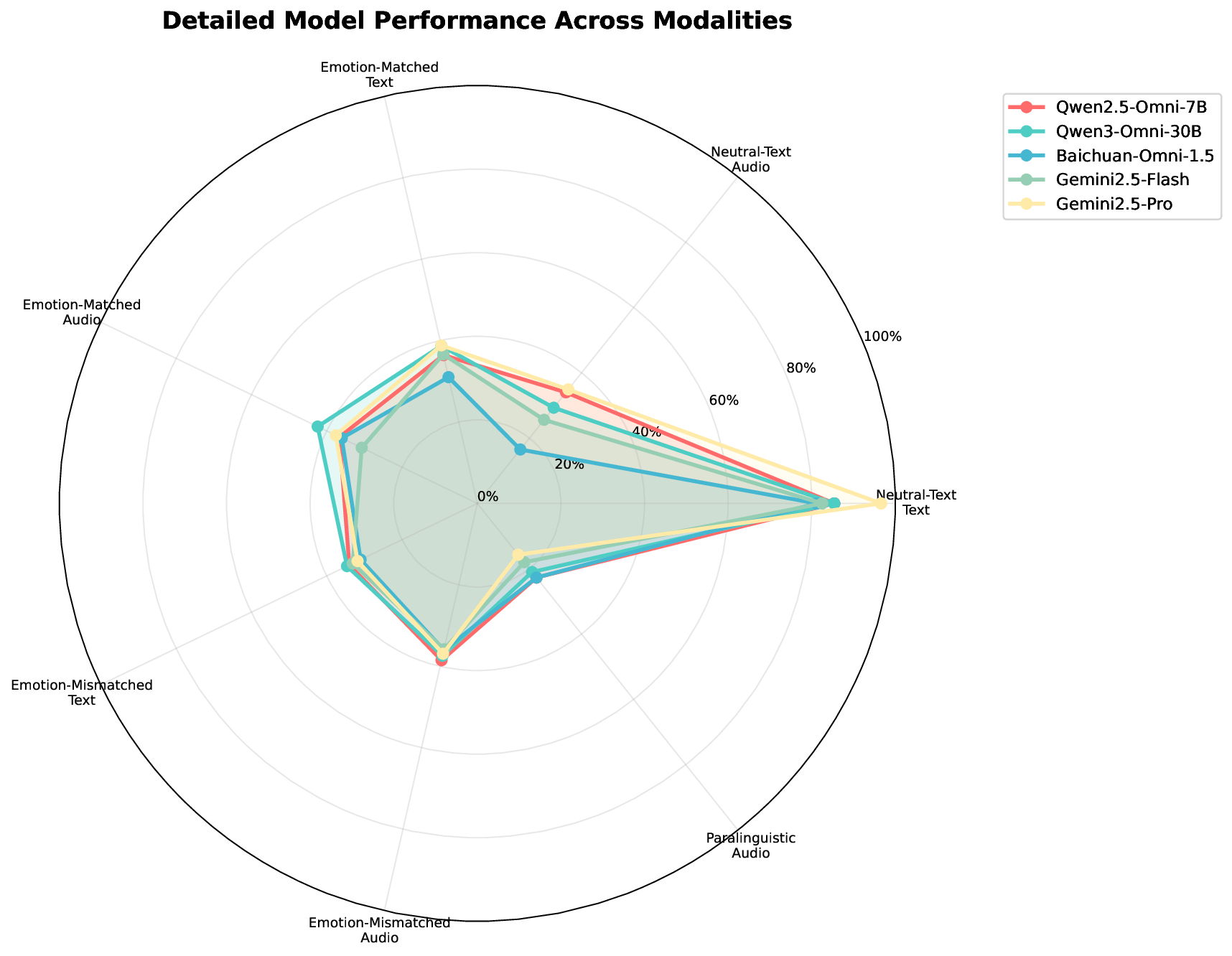

I am a Ph.D. Candidate in Computational Linguistics at The Ohio State University, specializing in speech synthesis, multimodal large language models, and reinforcement learning for audio. I work under the supervision of Dr. Micha Elsner and Dr. Andrew Perrault. My research focuses on advancing speech synthesis through reinforcement learning and diffusion models, developing speech emotion conversion systems, and creating benchmarks for evaluating multimodal LLMs on emotional speech understanding.

Previously, I completed my M.S. in Computer Science & Engineering at OSU and earned my B.A. in Linguistics from Sichuan International Studies University in China. I have industry experience as an Applied Scientist Intern at Amazon, where I developed production-ready speech emotion transfer systems and recommendation models.

Research Interests

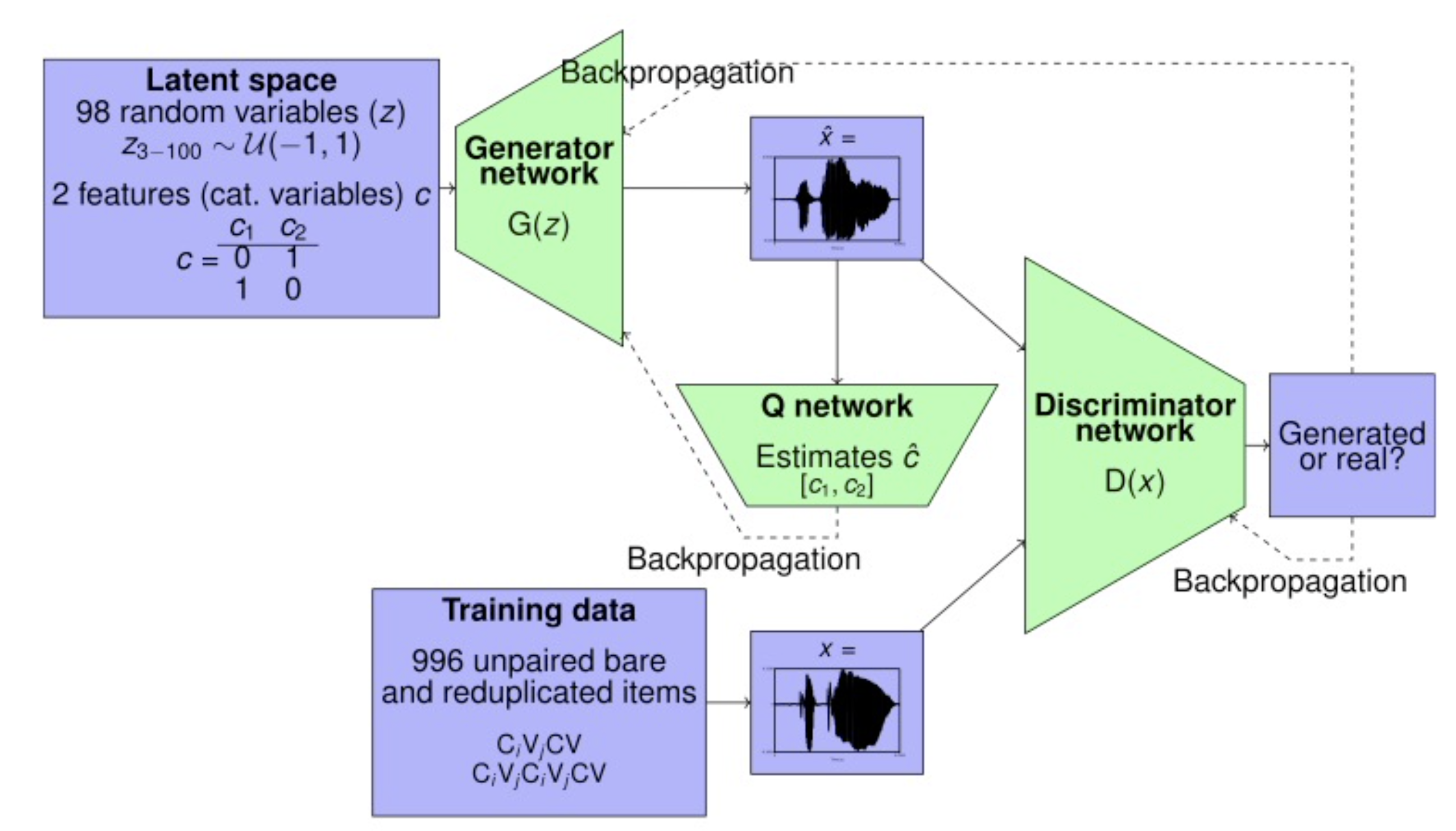

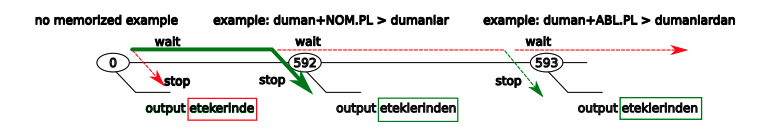

- Speech Synthesis: Text-to-speech systems, diffusion models, emotional speech generation, GANs for speech representation learning

- Multimodal Large Language Models: Speech-text cooperation, instruction tuning, semantic-emotion disentanglement

- Reinforcement Learning for Audio: RLHF, reward-based optimization, model fine-tuning

News

- [Aug. 2025] Started a new research project on a Social-Emotional Speech Dialogue Benchmark for Multimodal LLMs.

- [May 2025] Completed an internship at Amazon DEX AI, working on LLM-based recommendation systems.

- [Jan. 2025] Released a comprehensive emotion transfer dataset with 27K audio samples and published its project page.

- [Aug. 2025] Completed an internship at Amazon Prime Video and delivered an emotion transfer model to production.

- [May 2025] Paper “Reinforcement Learning for Fine-tuning Text-to-speech Diffusion Models” accepted to Interspeech 2025.

- [Aug. 2023] Paper “Exploring How Generative Adversarial Networks Learn Phonological Representations” accepted to ACL 2023 with an Area Chair Award.

Publications

- Interspeech, 2025.

- Annual Meeting of the Association for Computational Linguistics (ACL), 2023.

- TTIC Speech & Audio Foundation Models Workshop, 2025.

- Computational Linguistics, 2025. Journal article.

Services

Conference Reviewer

- International Conference on Learning Representations (ICLR), 2025-2026

- AAAI Conference on Artificial Intelligence (AAAI), 2025

- Annual Meeting of the Association for Computational Linguistics (ACL), 2025

- Annual Conference of the International Speech Communication Association (Interspeech), 2024
